
Compound eye fabricated by Song et al. Photo from UIUC College of Engineering.

While browsing the postdeadline papers, whose sessions run from 8:00 to 10:00 pm this evening, I noticed that the Applications and Technology session has a zoological theme.

Fly in the Ointment:

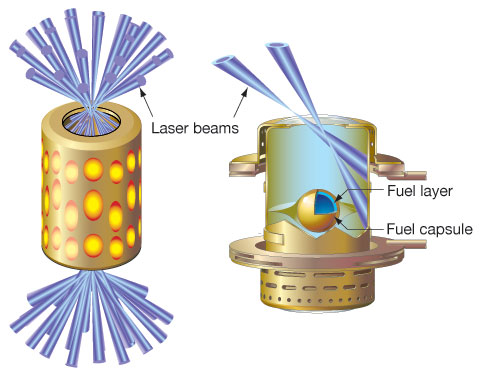

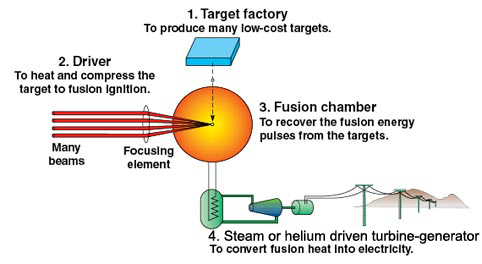

In postdeadline paper ATh5A.5, beginning at 8:48 pm, Song et al. will present work, recently published in Nature, on compound-eye cameras that mimic the physiology of a fly's eye. Unlike the eyes of humans and other vertebrates, the eyes of most animals are compound eyes made up of many optical units (facets), each with its own lens and set of photoreceptors. Compound eyes lack the sensitivity and resolution of single-lens eyes, which work by forming an image on a detection surface, but they can have a nearly infinite depth of field without any need to adjust the focal length of their lenses. Together with their wide field of view, this makes compound eyes very adept at perceiving relative motion; a good set of compound eyes lets a fly navigate with high precision in flight. Digital compound eyes therefore show great promise for micro air vehicles (MAVs) used for reconnaissance, sensing, and diagnostics in tight spaces, such as locating people in a collapsed building or flying inside and around machinery and other cramped environments with extreme conditions (high radiation levels, high temperatures, etc.).
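Why a short-focal-length facet needs no refocusing can be illustrated with the standard thin-lens hyperfocal-distance approximation from photography, H ≈ f²/(N·c) + f, where f is the focal length, N the f-number, and c the acceptable blur circle; focusing at H keeps everything from H/2 to infinity acceptably sharp. This is only a sketch under assumed numbers: the focal lengths, f-numbers, and blur tolerances below are hypothetical and not taken from Song et al., and treating a photodiode's width as the blur tolerance is a simplification.

```python
def hyperfocal_distance_mm(focal_length_mm: float, f_number: float, coc_mm: float) -> float:
    """Thin-lens hyperfocal distance H = f^2 / (N * c) + f, in millimetres.

    Focusing at H keeps objects from H/2 out to infinity acceptably sharp.
    """
    return focal_length_mm ** 2 / (f_number * coc_mm) + focal_length_mm


# Hypothetical numbers for illustration only (not from Song et al.):
# a conventional 50 mm camera lens at f/2 with a 0.03 mm circle of confusion...
camera_H = hyperfocal_distance_mm(50.0, 2.0, 0.03)
# ...versus a sub-millimetre microlens facet (f = 0.5 mm, f/1) whose blur
# tolerance is taken to be the width of a 0.1 mm photodiode.
facet_H = hyperfocal_distance_mm(0.5, 1.0, 0.1)

print(f"camera lens: H = {camera_H / 1000:.1f} m")  # camera lens: H = 41.7 m
print(f"facet: H = {facet_H:.2f} mm, sharp from {facet_H / 2:.2f} mm to infinity")
```

Because H scales with f², shrinking the focal length by a factor of 100 pulls the hyperfocal distance in by roughly four orders of magnitude, which is the intuition behind a fixed-focus facet seeing near and far objects alike.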

What makes the work of Song et al., a multi-institutional collaboration led by the Beckman Institute at the University of Illinois at Urbana-Champaign, so compelling is its use of recent advances in stretchable electronics and hemispherical detector arrays to create a compact, monolithic, scalable compound eye. In essence, the collaboration fabricated a planar layer of elastomeric microlenses and a planar layer of flexible photodiodes and blocking diodes, then aligned the two layers and stretched them into a hemispherical shape. Serpentine-shaped metal interconnects in the electronics layer provide the flexibility this stretching requires. The resulting device achieves a nearly infinite depth of field and a 160-degree field of view.

Crocodile Smile:

Postdeadline paper ATh5A.3, beginning at 8:12 pm, from Yang et al. of Case Western Reserve University actually addresses clinical diagnosis of human tooth decay using Raman imaging, though the authors image an alligator tooth as a proof of concept (alligators are crocodilians rather than true crocodiles, so the cute heading above is a bit of a stretch!).

Current clinical practice for dental caries (decay) lacks early-stage detection. Because X-ray imaging and visual inspection cannot reliably detect early lesions, decay is often caught only once cavities have formed, and over a tooth's lifetime these late-stage cavities may require multiple fillings or more costly measures such as crowns, bridges, or even complete replacement of the tooth.

If lesions could be detected early, they could be remineralized before decay progresses, preventing costly, invasive procedures later on.

Yang et al. use global Raman imaging, in which a 2D CCD array records the full field of view at a single wavenumber, rather than point-by-point inspection across a spectrum of wavenumbers. Their Raman images of an alligator tooth show a clear border between the dentin and enamel, with high contrast in mineral signal intensity. Similar images of human teeth suggest that the technique holds good promise for early clinical detection of tooth decay.
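The difference between global and point-by-point Raman imaging can be sketched in a few lines. Imaging "at a single wavenumber" means passing only light at one Raman shift to the CCD, so the filter's centre wavelength follows from 1/λ_scattered = 1/λ_laser − Δν̃. The 785 nm excitation and the 960 cm⁻¹ shift (the well-known phosphate band of hydroxyapatite, the main mineral in enamel) are assumptions for illustration; the source does not say which laser or band Yang et al. used, and the helper names are hypothetical. The exposure count is a deliberate simplification that ignores exposure time and signal-to-noise.

```python
def stokes_wavelength_nm(excitation_nm: float, raman_shift_cm1: float) -> float:
    """Wavelength (nm) of Stokes-scattered light at a given Raman shift (cm^-1)."""
    # 1e7 / wavelength[nm] converts a wavelength to a wavenumber in cm^-1.
    scattered_cm1 = 1e7 / excitation_nm - raman_shift_cm1
    return 1e7 / scattered_cm1


def acquisitions_needed(ny: int, nx: int, mode: str, n_bands: int = 1) -> int:
    """Count exposures needed to build an ny x nx Raman image (simplified).

    'point'  : raster-scan a focused laser spot and record a full spectrum at
               each pixel, so one exposure per pixel.
    'global' : illuminate the whole field and image it onto a 2D CCD through a
               narrow filter, so one exposure per imaged wavenumber band.
    """
    if mode == "point":
        return ny * nx
    if mode == "global":
        return n_bands
    raise ValueError(f"unknown mode: {mode!r}")


# Assumed values: 785 nm laser, 960 cm^-1 hydroxyapatite phosphate band.
filter_nm = stokes_wavelength_nm(785.0, 960.0)
print(f"centre the bandpass filter near {filter_nm:.1f} nm")
print(acquisitions_needed(512, 512, "point"))   # 262144
print(acquisitions_needed(512, 512, "global"))  # 1
```

The trade-off is that point mapping captures the whole spectrum at every pixel, while global imaging gives up spectral detail in exchange for a full-field image of one chemically specific band, which is what a mineral-contrast map of enamel and dentin needs.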